- Details

1. Videos about ChatGPT

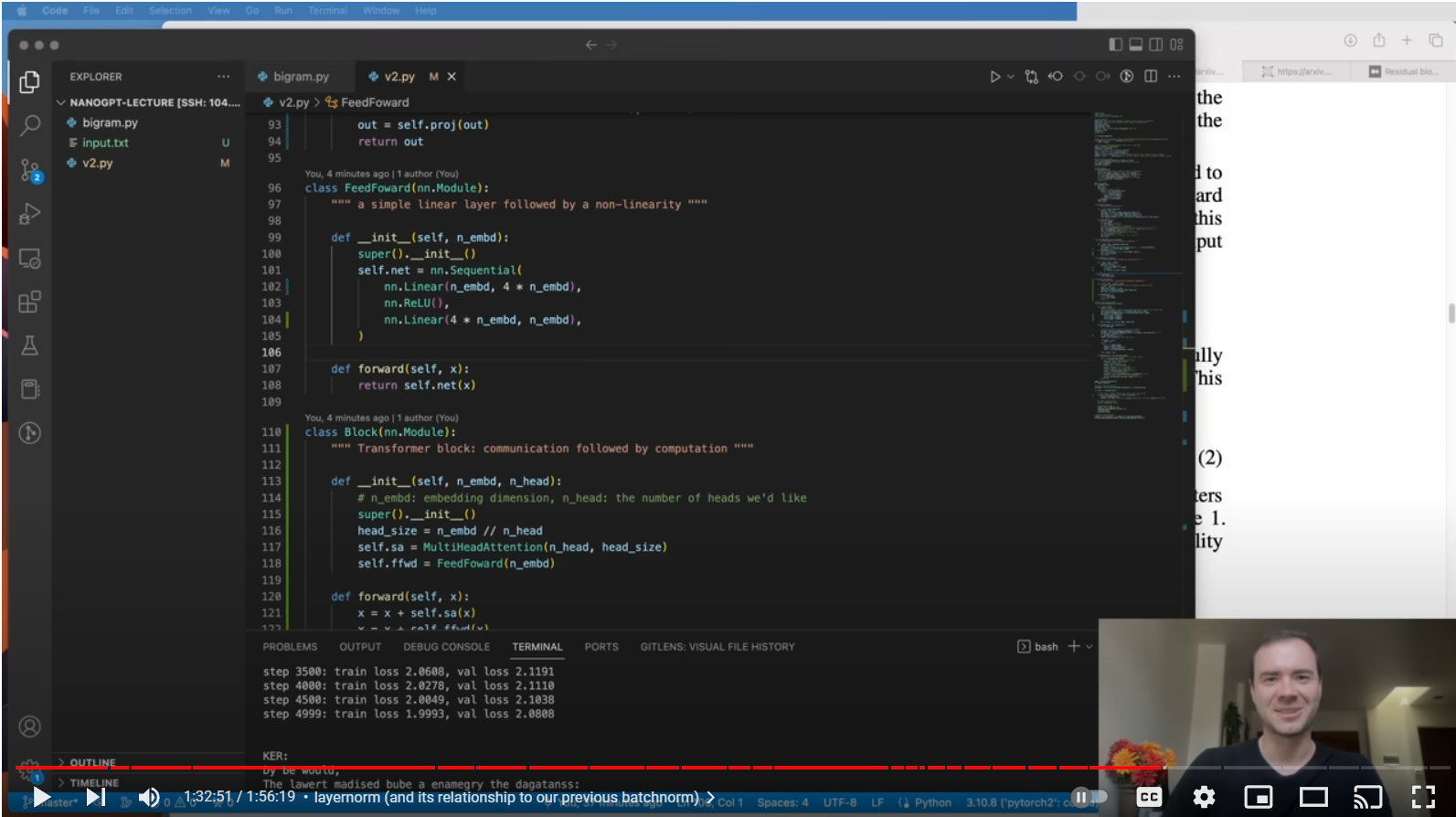

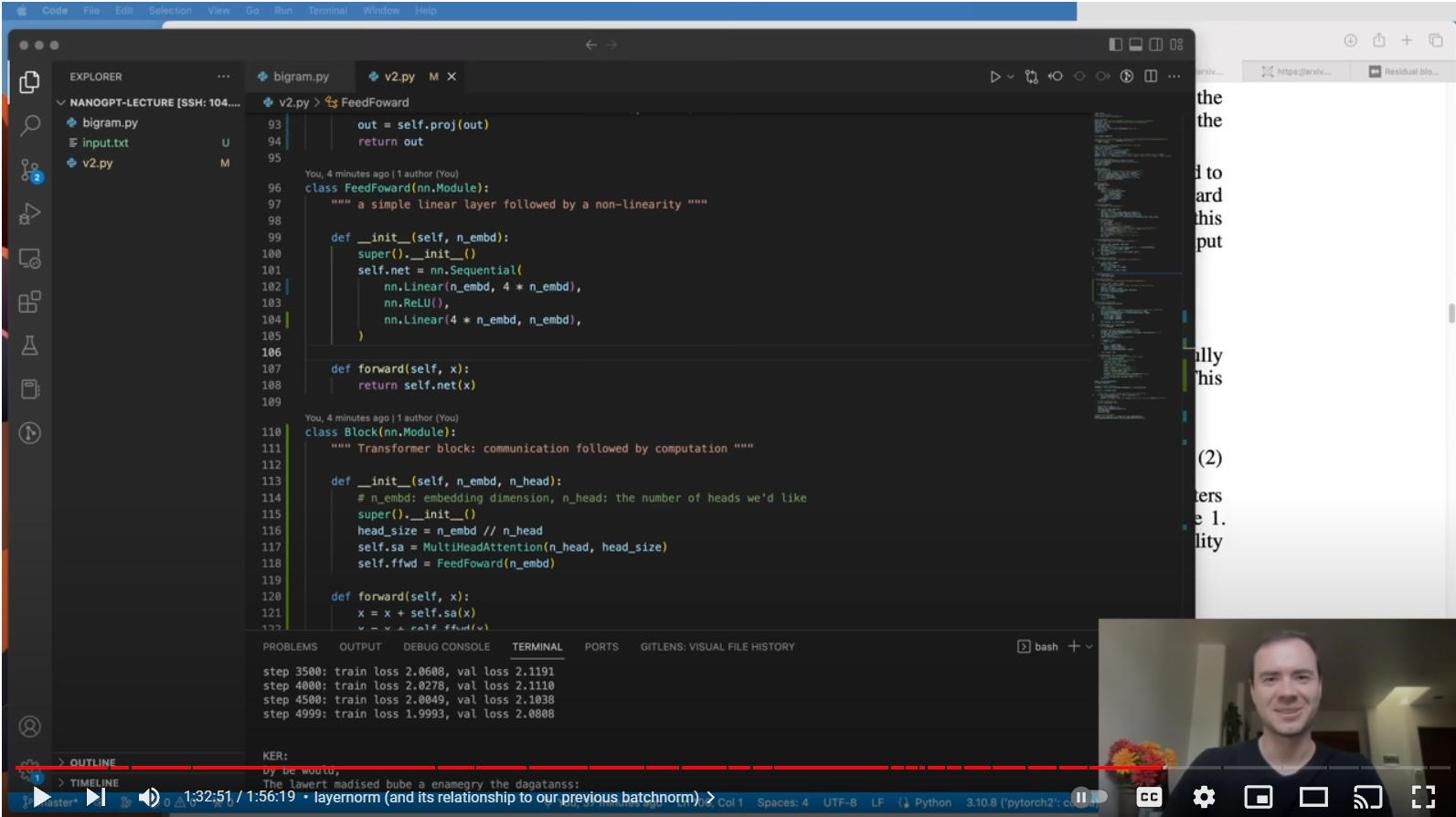

The best way to understand the inner workings of ChatGPT we found in Internet is this video of Andrej Karpathy, former head of Tesla Autopilot. He explains in detail how to write in Python a generative GPT bot for Shakespeare-like texts.

Let's build GPT: from scratch, in code, spelled out.

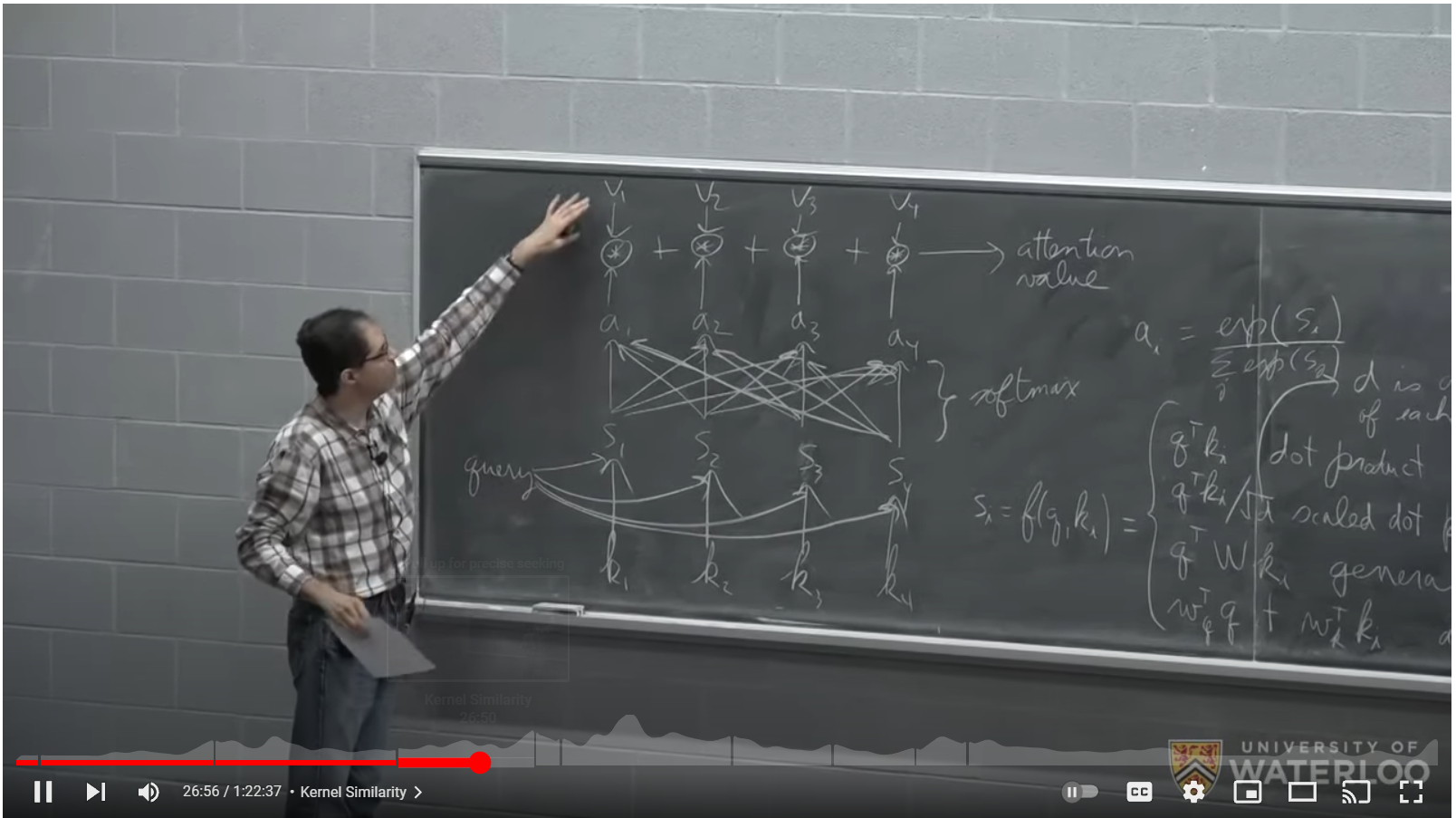

To understand the key component of the GPT pipeline, the transformer, we recommend this lesson of Pascal Poupart at the University of Waterloo. The video explains in detail how the transformer component works and which properties it has.

CS480/680 Lecture 19: Attention and Transformer Networks

2. ChatGPT in scientific literature

There is still no good book on ChatGPT, the best way to gain further understanding is to read through this collection of scientific papers in chronological order:

1. Bengio et al, 2003, A Neural Probabilistic Language Model

It shows a shared matrix C over the input tokens to project the tokens in a ~30 dimensional space in fashion similar to word2vec. Then there is some computation with a feed forward vector and a softmax layer that calculates the probability for the next token, which is chosen via a multinomial distribution sampling process.

2. Bengio et al, 2015, Neural machine translation by jointly learning to align and translate

It features a Recursive Neural Network Encoder-Decoder to translate from one language to another

3. OpenAI Team, 2017, Attention is all you need

It replaces the Recursive Neural Network of [2] with a new neural network component called the Transformer. It describes in detail the attention process, how it can be done in parallel with multi-head attention, and the need to mask the attention mechanism on the decoder part, so that information from previous tokens flows only to the next tokens and not vice versa. It describes how to superpose positional embeddings to the tokens.

4. Alec Redford and OpenAI Team, 2018, Improving language understanding by Generative Pre Training

The encoder part of [3] is dropped, to go from a model to do translations to a model that generates the probability for the next token. GPT-1 is born. It is a 12 layer decoder with 12 masked self-attention heads using 768 dimensions. It has 117 milion parameters. It introduces the concept of supervised fine tuning to adapt the network to tasks like Natural Language Inference, Question Answering, Semantic Similarity and Classification.

5. Alec Redford and OpenAI Team, 2019, Language Models are Unsupervised Multitask Learners

This paper introduces GPT-2 and shows its capabilities. It has 1.5 billion parameters. It is observed that large networks are more capable at the tasks described in [4].

6. OpenAI Team, July 2020, Language Models are Few Shot Learners

This paper describes the evolution from GPT-2 to GPT-3 which now has 175 billion parameters. 96 layers with 96 heads with a head dimensionality of 128 produces what we know as ChatGPT, currently available at https://chat.openai.com/.

GPT3 has a context window of 2048 tokens (~ 1500 words). With GPT-3.5 the context window was increased to 4096 (3000 words of english text).

The total train operations to compute the parameters is given as 3.14E23 flops. From this number, assuming 10 Teraflops GPUs, we estimate that the training of GPT-3 required a cluster of about 4'000 GPUs calculating full time for 3 months. It introduces also the concept that by giving 1-3 examples (shots) to the model as context, the model behaves better.

7. OpenAI Team, 2023, GPT-4 Technical Report

GPT-4 has the context window increased to 8192 tokens and has the capability to receive images in input. The paper describes what the model can do and how it behaves on several common tests, but does not disclose any numbers on the model size or the training time needed to calculate it.

8. Another cool source to learn about ChatGPT is: just ask ChatGPT 3.5 about how it works! A Google account is required to login, but it's free. At best ask about "complex chatbots" because using "ChatGPT" in the question triggers some kind of standard answer.

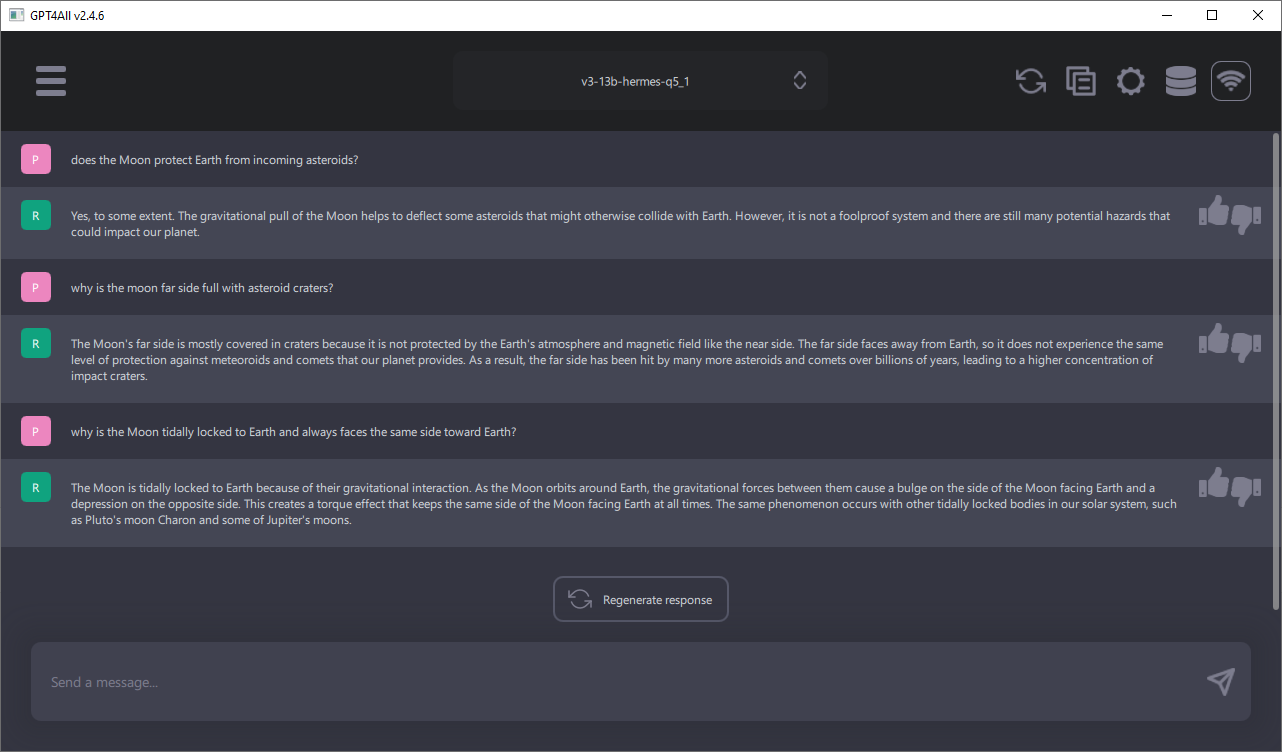

3. Execute ChatGPT on your local computer

The best Open Source project to follow is GPT4All. It is a complete chatbot that runs locally on your computer where is is possible to select among various models between 4 billions and 13 billions of parameters. RAM required is 16 GB for the larger models with 13 billion parameters. Smaller but faster models in generating responses with 7 billion parameters require only 8 GB RAM. There are installers for Windows, Linux and Mac OS X. Each week there are updates to the software or to the available models.

4. Emergent Behaviour

Here we asked ChatGPT 3.5, available online by OpenAI, on a neat feature of chatbots: for example they are able to translate in languages they did not learn or they are able to create source code in several computer languages:

User> What is the definition of "emergent behaviour" a phenomenon we observer in complex chatbots?

ChatGPT 3.5> "Emergent behavior" refers to the phenomenon where a complex system, such as a chatbot, exhibits behavior or properties that are not explicitly programmed or designed into it. Instead, these behaviors arise spontaneously as a result of interactions and relationships among the components of the system.

In the context of chatbots, emergent behavior can manifest as unexpected or unpredictable responses, creative or novel solutions to problems, or the ability to simulate human-like conversations. These behaviors emerge from the interactions between the underlying algorithms, machine learning models, and the data they are trained on.

Chatbots rely on various techniques, such as natural language processing, machine learning, and deep learning, to understand and generate human-like responses. They are often trained on large datasets of conversations, allowing them to learn patterns, language structures, and context. However, due to the complexity of language and the diversity of user inputs, chatbots may exhibit emergent behavior that goes beyond their initial training.

For example, a chatbot might learn to generate witty or humorous responses, even if it was not explicitly programmed to be funny. This emergent behavior arises from the chatbot's ability to identify patterns in the training data and generate creative or unexpected outputs.

Emergent behavior in chatbots can be both advantageous and challenging. On one hand, it can enhance user experience by making conversations more engaging and natural. On the other hand, it can also lead to unpredictable or undesirable responses, such as biased or offensive statements, if the training data contains such patterns.

To mitigate potential issues, developers and researchers continuously refine and improve chatbot algorithms, conduct rigorous testing, and employ techniques like human review and feedback loops to ensure that emergent behavior aligns with desired outcomes and ethical considerations.

5. Size of GPT 3-5 versus human brain size

We know for sure in the scientific paper about GPT-3 that the number of parameters in GPT 3-5 si around 175 billions. The number of parameters in an artificial neural network is the number of mathematical neurons (each neuron has a bias parameter) plus the number of connections between neurons (the weights in the W matrices). If we assume that the dimensionality of the model (d=12'288 for GPT 3.5 [6]) is the average number of connections between neurons, then by solving this simple equation x + 12288x = 175E9, we can speculate that GPT 3-5 has therefore x=14.2 million mathematical neurons.

Now let's get audacious and advocate that a mathematical neuron might be as powerful as a biological neuron, which nobody knows so far, we assume here that at least they seem to perform similar tasks.

The human brain according to Wikipedia has about 86 billion neurons and each neuron has at least 10'000 and up to 100'000 synapses which are connections between neurons. Let's get audacious again and assume that 12'000 is close to the average number of the synapses of the brain and the GPT 3-5 neurons with 12'288 connections are comparable with the human brain neurons also in regard to the average number of connections as the dimensionality of the GPT pipeline is the number of connections between GPT neurons.

After all these assumptions, we can directly compare the number of mathematical neurons in GPT 3-5 (14.2E6) and the number of biological neurons in the human brain. By computing the ratio of the two numbers, we see that GPT-3.5 is about 6'000 times smaller than the human brain.

Let's further speculate that each year the number of mathematical neurons in large language models like GPT 3-5 can be doubled each year by advances in hardware and software and that the neurons can be trained in reasonable time, say always under the year in which the doubling of neurons occurs. In such a scenario latest in 13 years we should be able to get an artificial brain the size of the human brain (log_2(6000) = 12.55).

- Details

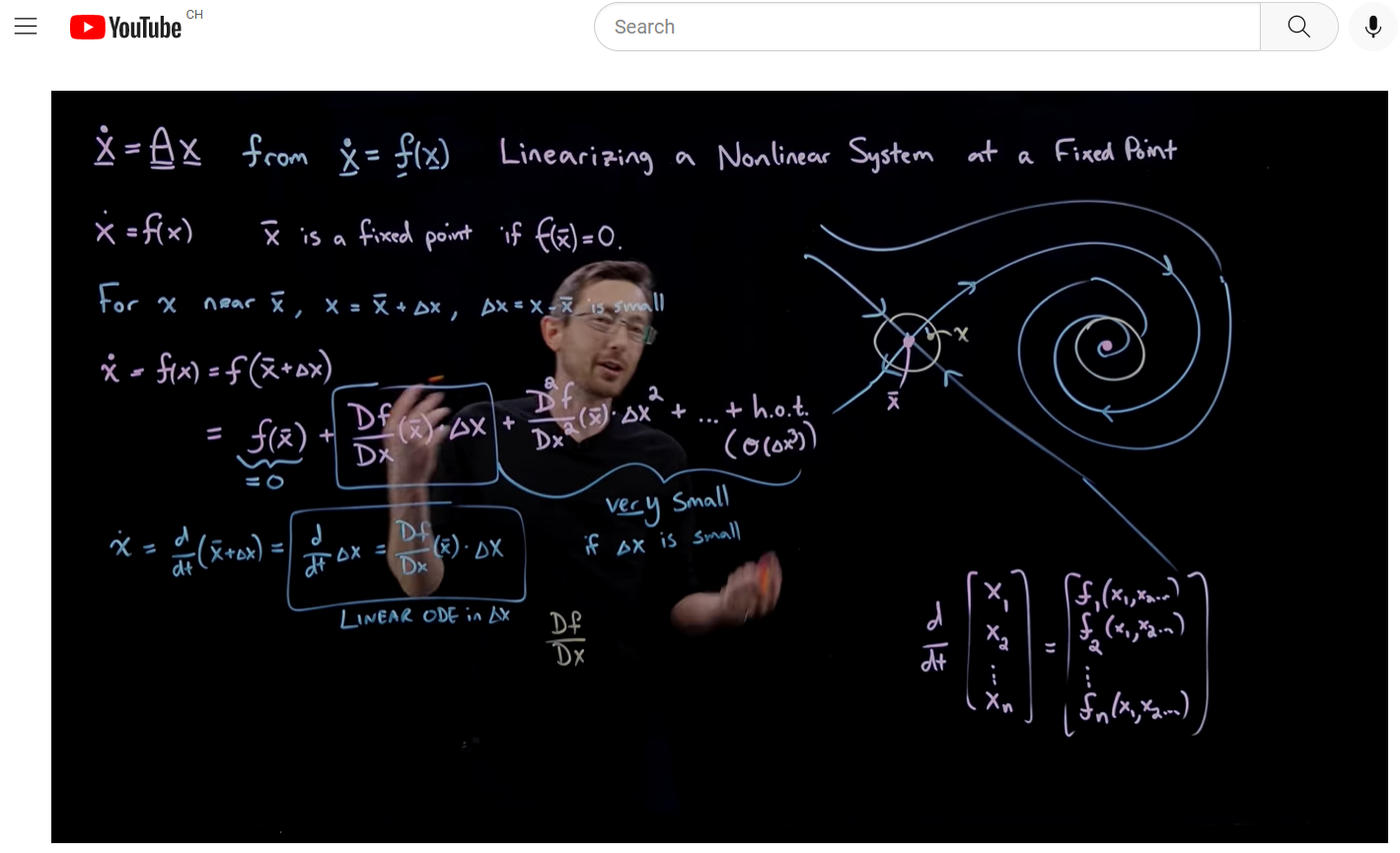

We recommend following Youtube Videos by Steven L. Brunton, professor of the University of Washington in Seattle, nicknamed Eigensteve, to brush up your math skills:

- Differential Equations and Dynamical Systems

Topics covered include: Ordinary Differential Equations, Charasteristic Equation, Higher Order ODEs, From a Higher Order ODE to a System of First Order ODEs in Matrix Form, Meaning of Eigenvalues and Eigenvectors in an ODE system, Jordan Canonical Forms, Linearizing non Linears DEs, Jacobian Matrix, Phase Portrait of Nonlinear DEs using Taylor expansion around center fixed points, how to choose DeltaT in computer simulations, Discrete Riemann Integration, Runge Kutta Integration for DEs. 49 videos, ~25 hours - Complex Analysis

Euler's Formula, Analytic Functions of a Complex Variable, the Complex Logarithm Log(z), Cauchy-Riemann conditions solve Laplace Equations, Integrals in the Complex Plane around Singularities. 13 videos, ~7 hours - Vector Calculus and Partial Differential Equations

Div, Grad, Curl as building blocks to solve PDEs, Gauss Divergence Theorem, Continuity Equation, a PDE for mass conservation, Stokes Theorem and Green's Theorem, Solving for the Heat and Wave Equation. 23 videos, ~8 hours - Reinforcement Learning

Discrepancy Modeling with Physics Informed Machine Learning, Neural Networks meet Control Theory, Q-Learning. 9 videos, ~4 hours

- Details

Deep Space created the new website for the local boy scout society, APE Poschiavo. It also recovered existing content from the old website which was incorrectly migrated from the previous Internet provider. You can visit the new site here.

- Details

On course.fast.ai there is a good online course to refresh your knowledge about Deep Learning.

Topics offered are how backpropagation is implemented in PyTorch using inline gradients, collaborative filtering using embedded techniques, working of convolutional neural network layers, tabular analysis with random forests and gradient accumulation to reduce memory footprint of GPUs.

For many topics there is an example in Excel that really explains what is going on under the hood of a complex deep learning model.

Last lesson is about Data Ethics and explains how through feedback loops implemented in social media our society got more polarized in important discussions.

There is a book for the course, which we recommend as well:

- Details

Gridcoin has just had a successful protocol update to version 5! Before version 5, it was mandatory for a cruncher to switch to the team Gridcoin, in order to be rewarded with this cryptocurrency.

From now on, any BOINC cruncher, regardless of team, can earn Gridcoin for their work.

Solo cruncher must send a new beacon within the next 2 weeks to not lose any past unclaimed rewards.

The BOINC community is about 10 times the Gridcoin community. It is believed that the removal of the Team Requirement should spark interest among BOINC crunchers for Gridcoin.

Page 1 of 4