Porting software to Docker and Kubernetes

deep space computing AG can "dockerize" an existing application and port it to run in a Kubernetes cluster.

A docker was a labourer who moved commercial goods into and out of ships when they docked at ports. The boxes and items came in different sizes and shapes, and experienced dockers were prized for their ability to fit goods into ships by hand in cost-effective ways. Hiring people to move goods around was not cheap, but there was no alternative. If a ship broke down, every single item had to be unloaded and reloaded by hand.

Modern ships are designed to carry, load and unload predictably shaped items more efficiently. These items are none other than the shipping containers we know today. A single docker can operate the machines designed to move containers. Each container holds many different items and is loaded only once, in an efficient manner, at the start of its journey. What is inside does not matter to the carrier ship, and the container can easily be transferred elsewhere, even onto trucks and trains.

In a similar way, software can now be deployed on Linux and Windows computers as containers. Each container packages an application together with all its libraries and requirements, so the same container can be shipped to several computers (the ships in the analogy), largely independent of how each host is set up and which libraries it has installed.

Docker is a lightweight virtualization technology: each container gets its own virtualized filesystem and network, but all containers share the operating system kernel of the host. Typically, a host computer powerful enough to run 20 traditional virtual machines can run up to 400 Docker containers.
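To make the packaging idea concrete, here is a minimal sketch in Python that renders the text of a Dockerfile for a small Python application. The function name `render_dockerfile`, the base image `python:3.12-slim`, and the file names `requirements.txt` and `main.py` are assumptions chosen for illustration; they do not describe any particular customer project.

```python
import json


def render_dockerfile(base_image, requirements_file, entrypoint):
    """Return Dockerfile text that packages an app with its dependencies.

    All arguments are illustrative assumptions supplied by the caller.
    """
    lines = [
        # Start from a base image that already contains the language runtime.
        f"FROM {base_image}",
        # Work inside a dedicated directory in the container filesystem.
        "WORKDIR /app",
        # Copy the dependency list first so this layer can be cached.
        f"COPY {requirements_file} .",
        # Install the libraries inside the image, not on the host.
        f"RUN pip install --no-cache-dir -r {requirements_file}",
        # Copy the application code itself.
        "COPY . .",
        # Exec-form CMD is a JSON array, so json.dumps produces valid syntax.
        "CMD " + json.dumps(entrypoint),
    ]
    return "\n".join(lines) + "\n"
```

Calling `render_dockerfile("python:3.12-slim", "requirements.txt", ["python", "main.py"])` returns six lines of Dockerfile text that could be saved as `Dockerfile` and built with `docker build`. The libraries end up inside the image, which is why the host does not need them installed.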

Kubernetes builds on the ideas behind Docker: it lets you describe a cluster declaratively in a text file, much as you might with XML. To be precise, the format normally used is YAML (originally "Yet Another Markup Language", now officially "YAML Ain't Markup Language"). If a host goes down due to a malfunction, Kubernetes can restart the affected containers on another working host computer.
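As a rough illustration of such a declarative description, the following Python sketch builds a Kubernetes Deployment manifest as a plain dictionary and serializes it to JSON, which Kubernetes accepts alongside YAML. The helper names `build_deployment` and `to_manifest_json`, and the example application name and image, are hypothetical.

```python
import json


def build_deployment(name, image, replicas):
    """Build a Deployment manifest as a dictionary.

    name, image and replicas are illustrative values chosen by the caller.
    """
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            # Desired number of running copies of the container.
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


def to_manifest_json(deployment):
    """Serialize the manifest so it can be written to a file."""
    return json.dumps(deployment, indent=2)
```

The output of `to_manifest_json(build_deployment("demo-app", "example.org/demo-app:1.0", 3))` could be saved to a file and applied with `kubectl apply -f`. The `replicas` field states the desired state; when a host fails, Kubernetes creates replacement pods on other hosts until that number is running again.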

Additionally, Kubernetes and Docker work best when existing monolithic applications are restructured as microservices, an architectural style that rearranges an application into a set of loosely coupled services.
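A loosely coupled service can be very small. The sketch below, using only the Python standard library, shows one hypothetical service with a `/greeting` endpoint and a `/healthz` endpoint that an orchestrator could poll to check that the service is alive. The class name, paths, and helper functions are assumptions for illustration, not a prescribed design.

```python
import json
import threading
from http.client import HTTPConnection
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class GreetingHandler(BaseHTTPRequestHandler):
    """Handles GET requests for one small, self-contained service."""

    def do_GET(self):
        if self.path == "/healthz":
            self._send_json(200, {"status": "ok"})
        elif self.path == "/greeting":
            self._send_json(200, {"message": "hello"})
        else:
            self._send_json(404, {"error": "not found"})

    def _send_json(self, status, payload):
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        # Silence per-request logging to keep the example quiet.
        pass


def start_service(port=0):
    """Start the service in a background thread; port 0 picks a free port."""
    server = ThreadingHTTPServer(("127.0.0.1", port), GreetingHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch_json(host, port, path):
    """Call the service the way another service would: over HTTP only."""
    conn = HTTPConnection(host, port, timeout=5)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        return response.status, json.loads(response.read().decode("utf-8"))
    finally:
        conn.close()
```

Other services talk to this one only through its HTTP interface, as `fetch_json` does, so it can be packaged in its own container, restarted, or replaced without touching the rest of the application.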

Services

deep space computing AG offers the following services:

- Software Development: creating well-documented turn-key solutions, developed following best practices and an object-oriented programming model. Training of users.

- Software Consultancy: analyzing customer needs, providing an improvement strategy, and offering an independent opinion from experts in the field.

- Organizing Seminars on State of the Art Technologies

- Selling CUDA Supercomputers tailored to customer needs

- Porting existing software to Kubernetes Clusters based on Docker container technology

Computational Cluster

The company runs a Computational Cluster with the following specifications:

| Specification | Value |
| --- | --- |
| PCs | 11 |
| CPUs | 120 |
| Graphic Cards | 3 |
| CUDA Cores | 14'080 |
| Tensor Cores | 400 |
| Estimated Computational Speed | 46.7 Teraflops (Peak) |
| Operating System | Ubuntu Linux 24.04 / Windows 11 |
| Entropy Sources | one thermal from semiconductor junction and one radio noise from ionosphere |
| Other Hardware | one Xilinx FPGA |
| Cooling | 1 air fan |
| Environmental Monitoring | Homematic system: among others smoke detection |
| Running since | 01.07.2017 |
| Power Consumption | 1.3 kW |

About half of the computers in the cluster are CUDA Supercomputers. The cluster is partially configured with Kubernetes.

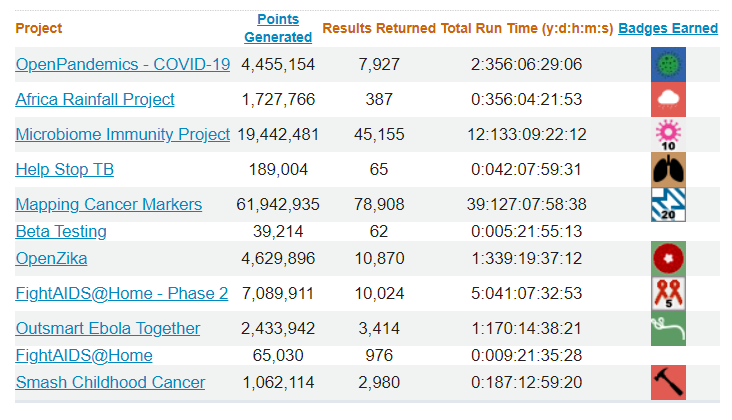

When the cluster is not performing calculations for customers, it volunteers its spare computing cycles to the BOINC distributed computing network:

Statistics are updated daily. Click on the image above to view more statistics about the cluster.

Here you can find our statistics for Folding@home, where we fight against the coronavirus.

Below you can see our statistics for the World Community Grid project, which helps to find cures for common diseases: